Welcome to my blog on Kubernetes Security, a 2-week series that covers theoretical and practical topics in Kubernetes security.

Day 1: Kubernetes & Container Security

Overview of Containers

- Containers are transforming modern application infrastructure.

- Understanding containers, Docker, and Kubernetes is essential for building modern cloud-native apps and for modernizing existing legacy applications.

Background information

Scenario

- Imagine you are building a web service on an Ubuntu machine and your code works as expected on your local machine.

- You attempt to run the same code on a remote server in your data centre by copying the local binaries, but it fails to work.

- The code can fail on the remote server for several reasons, such as differences in the operating system, missing binaries, libraries, or files, or incompatible software installed on the remote server (e.g. a different version of the Python interpreter or Java runtime).

- This scenario highlights the challenges of running code in a different environment and the importance of properly managing the necessary dependencies and configurations.

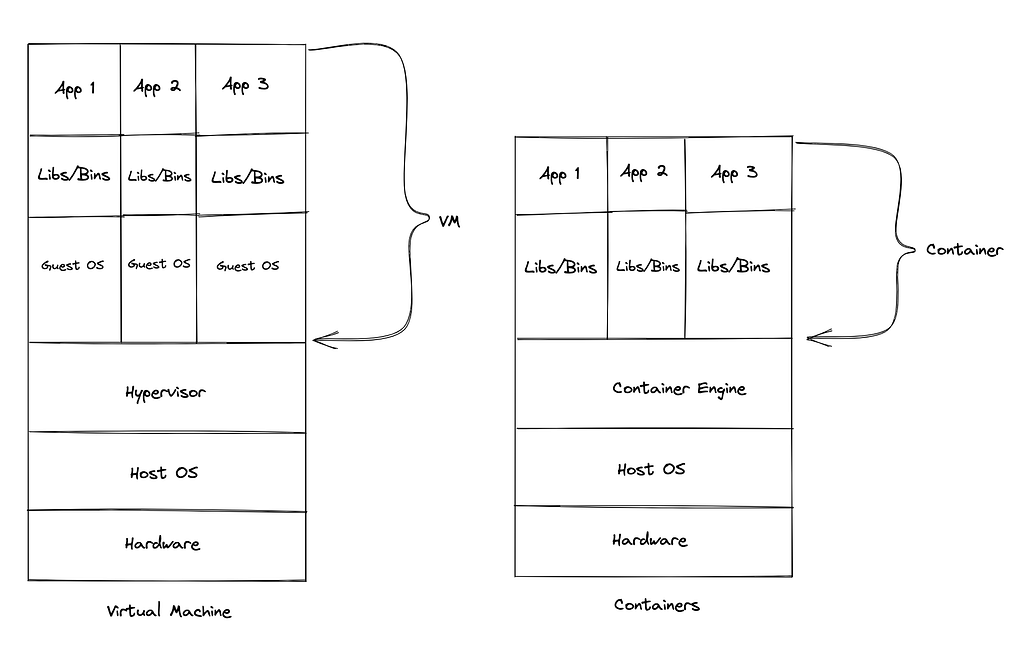

Virtualization facilitates running multiple operating systems on a single computer

Virtualization

- Virtualization refers to running a virtual version of an operating system using a hypervisor.

- Each VM requires its own operating system, which increases storage and memory overhead while the VM is running. As a result, virtual machines are heavyweight and add to the system’s complexity.

- To overcome these problems, virtualization was introduced at the operating-system level, an approach called “containerization”.

Definition of Container

- A container can be thought of as a self-contained unit of software. It contains all the necessary components of a project or service, including dependencies.

- The purpose of this encapsulation is to keep the contents isolated from the host system, ensuring that any changes made within the container will not affect the host.

- By using containers, you can run a single service or an entire development environment in an isolated and streamlined manner.

Docker and Containers

Docker can be thought of as a tool for managing containers, while containers provide an isolated environment for running applications.

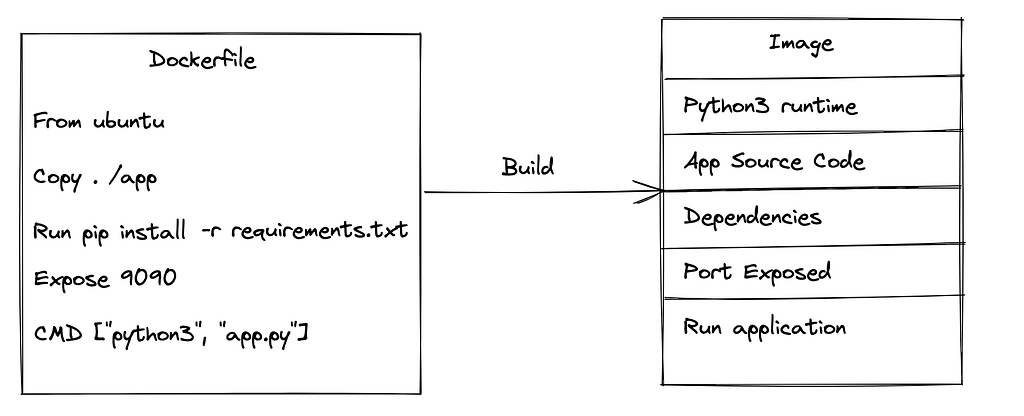

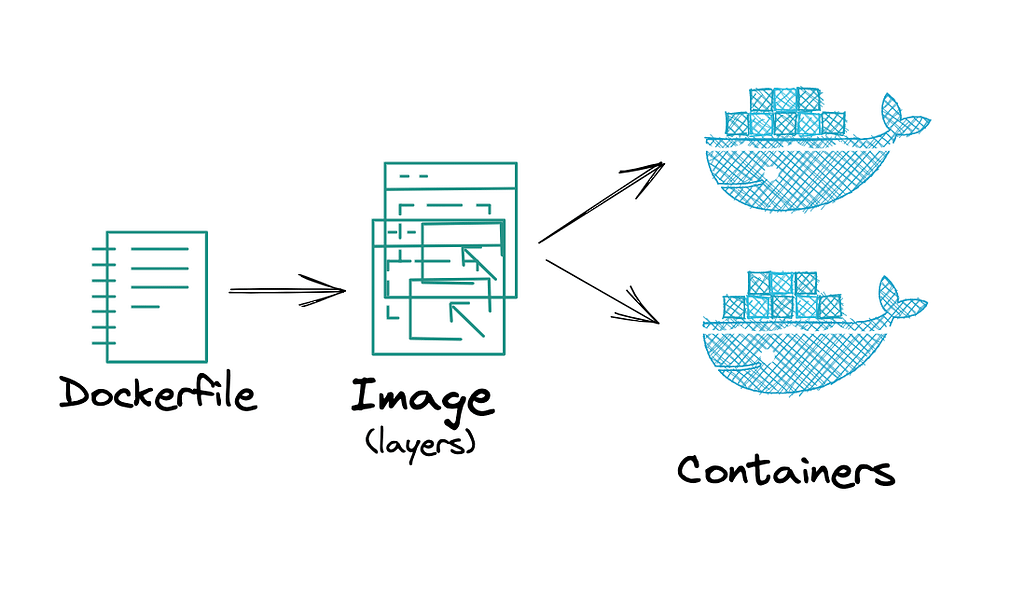

Building Container Images

Images in Containers:

- Images are created by following a series of instructions provided in the Dockerfile.

- During the image-building process, each command creates a layer that contributes to the final image.

- The final instruction, typically CMD or ENTRYPOINT, determines the command that is executed when a container starts.

- Images do not necessarily need to be stored or built on just your local machine.

- Containers are meant to be deployable anywhere and thus we should be able to access our images from any physical machine.

- Registries are used to remotely store and access images, privately as well as publicly.

Docker images vs. Docker Containers

- A Docker image acts as a blueprint or template, while a Docker container is an active instance of that blueprint.

- To create an image with your application’s code, you create a text file named Dockerfile that lists the necessary commands. The Docker builder uses this file to build the image. After building the image, you can store it in a container registry for version control.

- To run a Docker image, you must either build it locally or retrieve it from a registry.

Docker Hub is a widely used public registry, but private options such as Amazon Elastic Container Registry and Azure Container Registry are also available to keep your images secure.

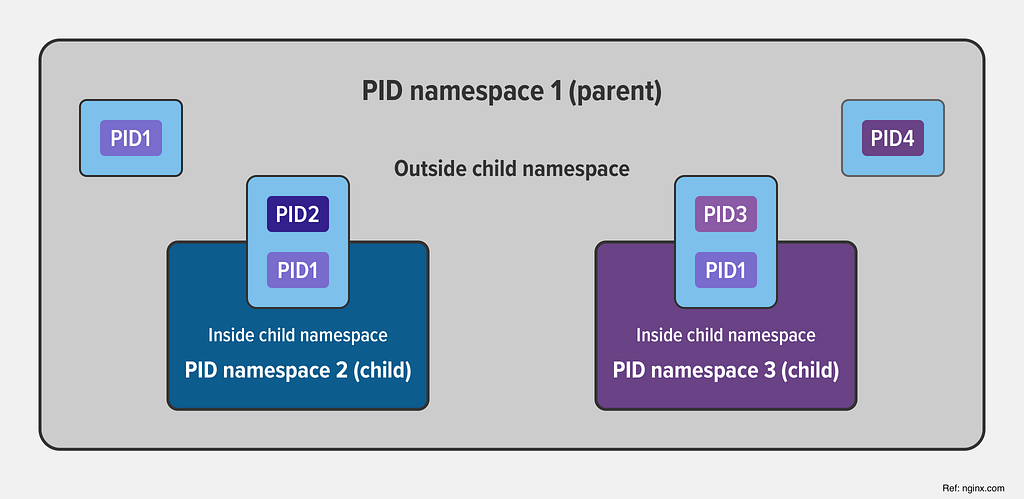

Control Groups and Namespaces

- Control Groups (cgroups): Control groups are used to limit, prioritize, and allocate resources such as CPU, memory, and I/O bandwidth to a group of processes. They allow for fine-grained control over the distribution of resources in a Linux system, making it possible to ensure that one group of processes does not interfere with others.

- Namespaces: Namespaces are used to provide an isolated view of the system resources to a group of processes. Each namespace provides a separate view of the system, including process IDs, network interfaces, file systems, and other resources. This isolation ensures that changes made within a namespace do not affect the rest of the system.

- Additionally, namespace isolation was later extended to cgroups themselves: a cgroup namespace gives its processes an isolated view of the cgroup hierarchy, much like the existing process isolation features.

- PID namespace: Processes in one namespace are not aware of processes in another namespace.

- Mount namespace: Processes in one namespace cannot access filesystems mounted in another namespace.

- User namespace: A process can have certain privileges inside a namespace but different privileges outside that namespace.

- Interprocess communication (IPC) namespace: IPC handles the communication between processes by using shared memory areas, message queues, and semaphores (used mostly by databases). When a program needs to store information for a short time, it asks the operating system to reserve a certain amount of random access memory (RAM) for its process. An IPC namespace keeps these IPC objects private to the processes inside it.

The most commonly used Linux namespaces are PID, Mount (MNT), User, Net, UTS (Unix Timesharing System), and IPC.
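To make namespaces and cgroups a little more tangible, here is a minimal Python sketch that reads them for the current process from the Linux /proc filesystem. It assumes a Linux host with /proc mounted; the function names list_namespaces and list_cgroups are made up for this post. Two processes share a namespace when the identifiers printed for that namespace type match.

```python
import os


def list_namespaces(pid="self"):
    """Return {namespace_type: identifier} read from /proc/<pid>/ns."""
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    if not os.path.isdir(ns_dir):  # not Linux, or /proc is unavailable
        return namespaces
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink whose target looks like "pid:[4026531836]"
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # entry not readable for this process
    return namespaces


def list_cgroups(pid="self"):
    """Return the non-empty lines of /proc/<pid>/cgroup."""
    try:
        with open(f"/proc/{pid}/cgroup") as fh:
            return [line.strip() for line in fh if line.strip()]
    except OSError:
        return []


if __name__ == "__main__":
    for name, ident in list_namespaces().items():
        print(f"{name:20} {ident}")
    for line in list_cgroups():
        print("cgroup:", line)
```

Running the script once on the host and once inside a container should show different identifiers for the namespaces that the container runtime has isolated.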

Advantages of Using Containers

- Ease of deployment is the biggest advantage of containers as they are easily deployable on a variety of systems, due to their isolation.

- Run multiple services on one machine

- Isolation from the host operating system prevents unwanted user access to the host’s file system

- Containers have far lower memory requirements than virtual machines

Container Orchestration

- Container Orchestration is the automation of the operational efforts like deployment, management, scaling and networking required to run containerized workloads. Scalability, Reliability & Operability are the three cornerstones of any distributed system.

- Container Orchestration tools provide a way for managing containers and microservices architecture

Kubernetes

- Kubernetes is an orchestration engine that solves the problems associated with deploying and scheduling and simplifies the management of containers in the cloud.

- Kubernetes is backed by Google’s experience of running workloads at huge scale in production over the past 15 years.

This is just the beginning of a long cruise... Be ready for this exciting journey!

Day 2: Introduction to Container Security

Container Security

- A single container can contain multiple vulnerabilities, which can lead to security incidents.

- Securing Containers requires a continuous security strategy that must be integrated into the entire software development process.

- This includes securing the build pipeline, the container images, the machines hosting the containers, the runtime systems (such as Docker or containerd), the container platform, and the application layers.

Importance of Container Security

The security of containers is crucial because the image holds all the components that will run the application.

- Risk of Vulnerabilities: The presence of vulnerabilities in the container image increases the risk and potential harm of security issues during production.

- Monitoring Production: To minimize these risks it is essential to monitor production.

- Building Secure Images: Creating images without vulnerabilities or elevated privileges can help improve security.

- Monitoring Runtime: Despite having secure images, it is still necessary to monitor what is happening during runtime.

- Essential for Safe Deployments: Ensuring the security of containers is a critical aspect of safe and successful deployments.

- Protecting Data: Securing containers can help protect sensitive data and prevent unauthorized access.

- Maintaining Trust: Maintaining the security of containers is important in building and maintaining the trust of customers, stakeholders, and partners.

Container Security Best Practices

- Securing Images: Containers are created from container images, so the attack surface can be minimized by including only essential application code and dependencies in the image and removing any tools or libraries that are not required. Always use trusted images.

- Securing Registries: Implement security controls for a private container registry to protect images. Ensure integrity and establish strict access control.

- Securing Deployment: Ensure the target environment is secure by hardening the operating system, setting up VPC, security groups, and firewall rules, and restricting access to container resources.

- Automated Testing: Use automated scanning tools such as Clair or Anchore to detect vulnerabilities in the code and environment before deployment.

- Continuous Monitoring: Continuously monitor containers, host systems, and the environment for security threats and vulnerabilities.

Practicals on Docker

Ladies and gentlemen, get ready to explore the Docker playground!

Understanding Docker Layers

Docker Layers:

A Docker build consists of a series of ordered build instructions. Docker layers are the sets of files that result from executing each instruction. Layers can be reused by multiple images, which saves disk space and reduces the time it takes to build images, while still preserving their integrity.
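The layering idea can be sketched with a toy model in Python. This is a simplification, not how Docker is implemented: each layer is a dictionary of paths, layers are applied bottom to top, and a special marker plays the role of a deletion ("whiteout") entry. The file names and the helpers merged_view and layers_containing are made up for illustration.

```python
WHITEOUT = object()  # marker meaning "this layer deletes the file"


def merged_view(layers):
    """Apply layers bottom to top and return the files a container would see."""
    view = {}
    for layer in layers:
        for path, content in layer.items():
            if content is WHITEOUT:
                view.pop(path, None)  # hidden from the merged view
            else:
                view[path] = content
    return view


def layers_containing(layers, path):
    """Return the indexes of layers that still store real content for path."""
    return [
        index
        for index, layer in enumerate(layers)
        if path in layer and layer[path] is not WHITEOUT
    ]


layers = [
    {"/bin/sh": "shell binary"},        # base layer
    {"/tmp/app.py": "print('hello')"},  # a later step adds a file
    {"/tmp/app.py": WHITEOUT},          # an even later step deletes it
]

print(merged_view(layers))                       # the deleted file is gone here
print(layers_containing(layers, "/tmp/app.py"))  # but layer 1 still stores it
```

The point of the model is that deleting a file in a later layer only hides it: the earlier layer still carries the content, which is why inspecting images layer by layer is worthwhile.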

Basics of Dockerfile:

- A Dockerfile is a plain text file that contains instructions to build an image.

- The Dockerfile is essential as it specifies what should be downloaded, the commands and arguments to run once the image is built, and how to configure the image.

- Because the same steps are executed on every build, a Dockerfile can be used to create clean images that are consistent across multiple builds.

Demo: Using a Dockerfile

- A Dockerfile is a text file that contains a set of instructions for building a Docker image. Each instruction in the Dockerfile provides a step in the image-building process.

- Typical Dockerfile:

FROM httpd:latest

LABEL maintainer="Security Dojo <namaste@securitydojo.co.in>"

LABEL version="1.0"

LABEL description="This is a sample Docker image."

EXPOSE 80

- Explanation of Dockerfile:

- FROM: Sets the base image, which can be Ubuntu, Redis, MySQL, etc. (httpd in this example).

- LABEL: Adds metadata to the image, such as the maintainer’s email, the author, the version, or a description.

- EXPOSE: Tells Docker that a container listens for traffic on the specified port. It documents the port but does not publish it to the host on its own.

- The instructions in a Dockerfile are executed in order from top to bottom. Each instruction creates a new layer in the image, which is cached and can be reused in subsequent builds as long as that instruction and the ones before it have not changed.
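The caching behaviour described above can be approximated with a short Python sketch. It is a simplified model under stated assumptions, not Docker's actual cache: each layer's key is a hash of the previous key plus the instruction text, so changing one instruction changes the key of that layer and every layer after it. A real builder also takes other inputs into account, such as the contents of files copied into the image. The function names are hypothetical.

```python
import hashlib


def layer_keys(instructions):
    """Return one cache key per instruction; each key depends on all earlier ones."""
    keys, parent = [], ""
    for instruction in instructions:
        parent = hashlib.sha256((parent + "\n" + instruction).encode()).hexdigest()
        keys.append(parent)
    return keys


def reusable_layers(old, new):
    """Count how many leading layers of `new` match a previous build `old`."""
    count = 0
    for old_key, new_key in zip(layer_keys(old), layer_keys(new)):
        if old_key != new_key:
            break  # this layer and everything after it must be rebuilt
        count += 1
    return count


first_build = ["FROM httpd:latest", 'LABEL version="1.0"', "EXPOSE 80"]
second_build = ["FROM httpd:latest", 'LABEL version="1.1"', "EXPOSE 80"]

print(reusable_layers(first_build, second_build))  # 1
```

Only the FROM layer is reused in this example: the LABEL line changed, so EXPOSE 80 is rebuilt as well even though its text is identical. This is also why frequently changing instructions are usually placed near the end of a Dockerfile.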

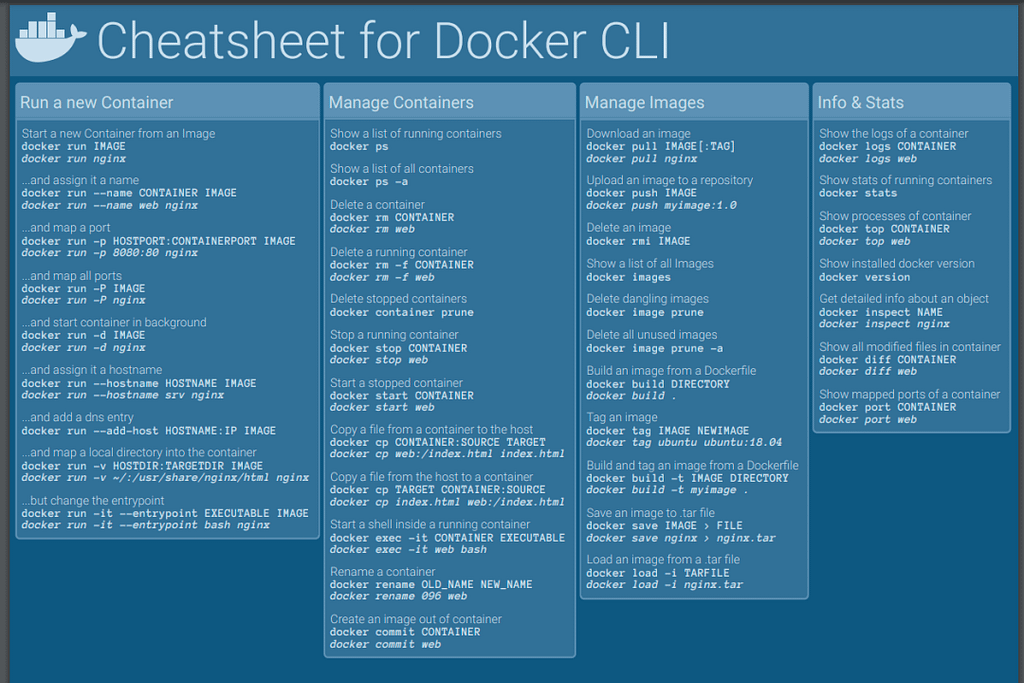

Demo on Docker commands and their usage

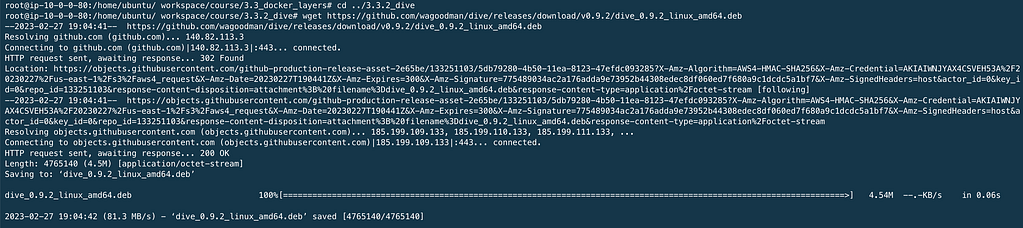

Day 3: Lab: Using Dive For Secret Exfiltration

Lab Scenario

The Lab Scenario uses the open-source Dive tool for layer analysis.

Lab Pre-requisites:

- Ubuntu Server.

- Install Docker.io and Docker Compose.

- Deploy Portainer (This is an optional step).

- Tool: Dive

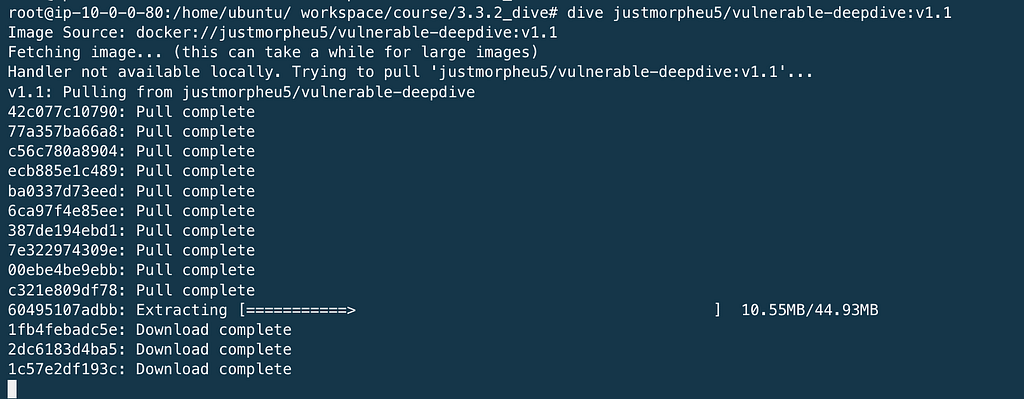

Commands:

mkdir 3.3.2_dive

cd 3.3.2_dive

wget https://github.com/wagoodman/dive/releases/download/v0.9.2/dive_0.9.2_linux_amd64.deb

sudo apt install ./dive_0.9.2_linux_amd64.deb && rm dive_0.9.2_linux_amd64.deb

The trailing rm dive_0.9.2_linux_amd64.deb deletes the downloaded package after installation.

Run Dive to download the Docker image. By default, the latest tag is used when pulling an image from the Docker registry, so we specify the v1.1 tag explicitly.

dive justmorpheu5/vulnerable-deepdive:v1.1
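The tag handling can be illustrated with a few lines of Python. This is a simplified sketch that only splits a name from its tag and falls back to latest when no tag is given; it does not handle digest references (name@sha256:...), and the function name split_image_reference is made up for this post.

```python
def split_image_reference(reference, default_tag="latest"):
    """Split 'name[:tag]' into (name, tag), defaulting the tag when it is missing."""
    # Only look for ":" after the last "/", so a registry port such as
    # "localhost:5000/app" is not mistaken for a tag.
    last_segment = reference.rsplit("/", 1)[-1]
    if ":" in last_segment:
        name, tag = reference.rsplit(":", 1)
        return name, tag
    return reference, default_tag


print(split_image_reference("justmorpheu5/vulnerable-deepdive:v1.1"))
print(split_image_reference("httpd"))
```

The first call keeps the explicit v1.1 tag, while the second falls back to latest, which is the behaviour this lab avoids by naming the tag.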

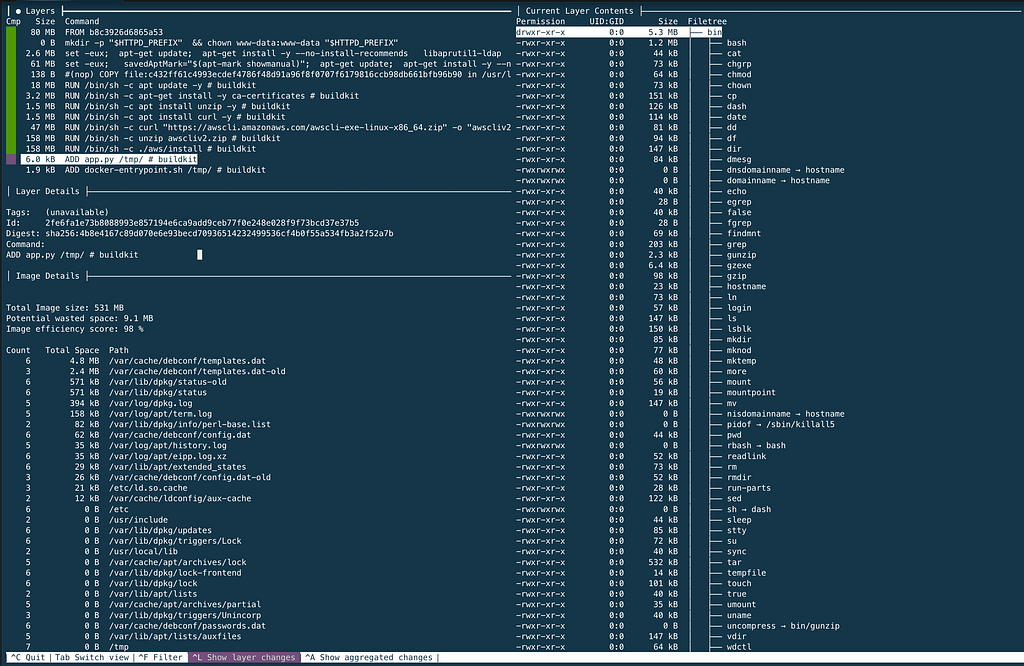

Analyze each layer of the Docker image. Navigate with the arrow keys; the app.py file can be viewed in the 12th layer.

Press Ctrl+C to exit Dive.

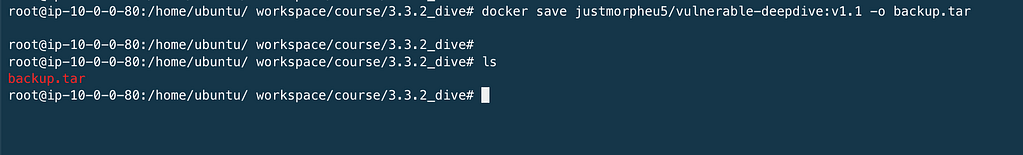

Let’s save the Docker image as a backup.tar file.

docker save justmorpheu5/vulnerable-deepdive:v1.1 -o backup.tar

ls

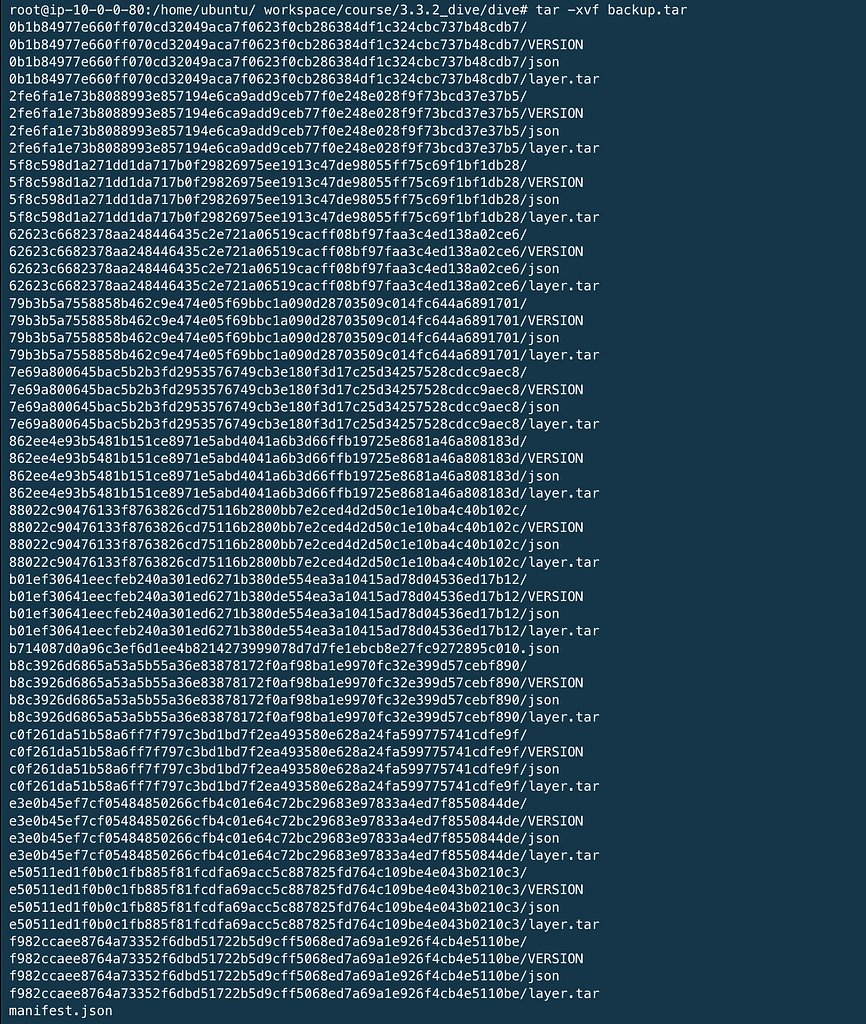

Extract the tar file using the tar command.

mkdir dive && mv backup.tar dive && cd dive && ls

tar -xvf backup.tar

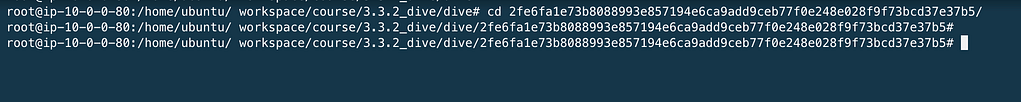

Navigate to the extracted folder starting with

2fe6fa1e73b8088993e857194e6ca9add9ceb77f0e248e028f9f73bcd37e37b5/

cd 2fe6fa1e73b8088993e857194e6ca9add9ceb77f0e248e028f9f73bcd37e37b5/

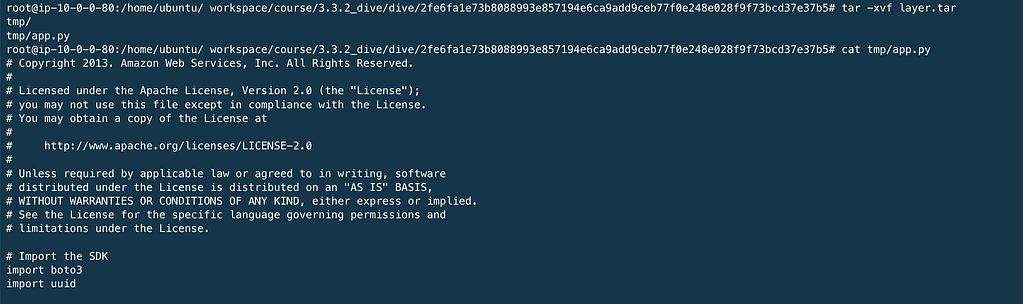

Extract the layer.tar file and display the app.py file.

tar -xvf layer.tar

cat tmp/app.py
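The manual steps above (unpack the saved image, unpack a layer, read the file) can also be scripted. The Python sketch below assumes the archive layout seen in this lab, where each layer is stored as a folder containing layer.tar; other Docker versions may lay out the saved archive differently. The function name find_in_layers is made up for this post, and each layer is read fully into memory, which is fine for a small lab image.

```python
import io
import tarfile


def find_in_layers(image_file, wanted_name):
    """Yield (layer_member, file_path, text) for files named wanted_name in any layer.

    image_file is a binary file object for an archive created by `docker save`.
    """
    with tarfile.open(fileobj=image_file) as outer:
        for member in outer.getmembers():
            if not (member.isfile() and member.name.endswith("layer.tar")):
                continue
            # Load the nested layer archive and open it as a tar file of its own
            layer_bytes = outer.extractfile(member).read()
            with tarfile.open(fileobj=io.BytesIO(layer_bytes)) as layer:
                for entry in layer.getmembers():
                    if entry.isfile() and entry.name.split("/")[-1] == wanted_name:
                        raw = layer.extractfile(entry).read()
                        yield member.name, entry.name, raw.decode("utf-8", "replace")
```

For the lab image, opening backup.tar in binary mode and looping over find_in_layers(fh, "app.py") would print every copy of app.py along with the layer it came from, without extracting anything to disk.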

Now check the file for the AWS credentials AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY.
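Checking a file for credentials by eye works for one file, but a small pattern search scales better. The Python sketch below is a rough heuristic, assuming that access key IDs are 20 characters starting with AKIA and that secret access keys are 40 characters assigned to a variable named AWS_SECRET_ACCESS_KEY. The sample text uses the placeholder keys from the AWS documentation, not real credentials, and the function name find_aws_credentials is made up for this post.

```python
import re

# Assumed patterns (heuristics, not an official specification)
ACCESS_KEY_RE = re.compile(r"\b(AKIA[0-9A-Z]{16})\b")
SECRET_KEY_RE = re.compile(
    r"AWS_SECRET_ACCESS_KEY\s*[=:]\s*[\"']?([A-Za-z0-9/+=]{40})[\"']?"
)


def find_aws_credentials(text):
    """Return the candidate access key IDs and secret access keys found in text."""
    return {
        "access_key_ids": ACCESS_KEY_RE.findall(text),
        "secret_access_keys": SECRET_KEY_RE.findall(text),
    }


sample = '''
AWS_ACCESS_KEY_ID = "AKIAIOSFODNN7EXAMPLE"
AWS_SECRET_ACCESS_KEY = "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY"
'''

print(find_aws_credentials(sample))
```

Feeding the contents of tmp/app.py to find_aws_credentials would list any matching keys. Treat hits as candidates to review, since a pattern match alone does not prove a key is valid.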
